With a wave of new articles published every day, how is an emergency physician to know how to keep up to date? One EP’s fight against biased literature reviews, followed by an admittedly biased endorsement

“The evidence base for clinical effectiveness has become so vast that it is essentially unmanageable for individual providers.”

-Institute of Medicine, 2001

Each day, clinicians are bombarded with biomedical information from a variety of internet and snail-mail sources, from journals to specialty organizations to proprietary secondary peer reviewers. To understand how big a problem this is, consider that approximately 2,740 MEDLINE-archived biomedical publications appear each day from 5,484 peer-reviewed journals. In addition, the European equivalent of MEDLINE, the Excerpta Medica Database (EMBASE), archives another 1,800 unique biomedical journals. To consume this tidal wave of information, a clinician would need to read 114 manuscripts per hour, 24/7. And that doesn’t even include “news magazines” like this one, which are not archived in MEDLINE. Forget about seeing patients or generating clinical revenue; you’ve got too much homework to do! What is a busy clinician to do?
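For readers who want to check the arithmetic, the short Python sketch below reproduces the 114-per-hour figure from the MEDLINE count alone (EMBASE-only journals are left out, since no daily article count is given for them). The helper name `required_reading_rate` is hypothetical, and the calculation assumes articles arrive evenly across the week. It also shows how the required pace changes if reading time shrinks to the roughly 4 hours per week that surveys report.

```python
# A back-of-the-envelope check of the reading load described above.
# Assumption: MEDLINE articles arrive evenly, 2,740 per day, every day.
MEDLINE_ARTICLES_PER_DAY = 2740
DAYS_PER_WEEK = 7


def required_reading_rate(articles_per_day: float, reading_hours_per_week: float) -> float:
    """Articles per hour a reader must finish to keep pace (hypothetical helper)."""
    if reading_hours_per_week <= 0:
        raise ValueError("reading time must be positive")
    articles_per_week = articles_per_day * DAYS_PER_WEEK
    return articles_per_week / reading_hours_per_week


# Reading around the clock: 24 hours x 7 days = 168 hours per week.
round_the_clock = required_reading_rate(MEDLINE_ARTICLES_PER_DAY, 24 * DAYS_PER_WEEK)
# Reading only about 4 hours per week.
four_hours = required_reading_rate(MEDLINE_ARTICLES_PER_DAY, 4)

print(f"24/7 reading:     {round_the_clock:.0f} articles per hour")  # 114
print(f"4 hours per week: {four_hours:.0f} articles per hour")       # 4795
```

Spread over a realistic 4-hour weekly reading budget, the same MEDLINE output would demand roughly 4,800 articles per hour, which is why filtering, not speed reading, is the only workable answer.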

Here are four approaches that I’ve contemplated – along with the voices of the inner demon and angel sitting on my opposing shoulders:

1. Ignore everything – easy but irresponsible and a fast-track to practicing obsolete medicine. (Choudhry 2005)

2. Read everything – thorough, but my wife and kids like me to be home sometimes and my Chairman believes that I should actually see patients in the ED.

3. The “Pull” Method – Pull the relevant information to me by conducting focused searches of the literature using free electronic search engines such as PubMed or the TRIP database. This can be useful, particularly when contemplating problems during office hours or preparing for lectures or journal club. It is less useful when trying to maintain 3.0 patients per hour during a typical ED shift. Other resources that can be accessed for a fee are available (ACP Journal Club, McMaster Plus) or being developed (Arizona Health Sciences). However, searching is one Achilles heel of Evidence Based Medicine: the available evidence suggests that clinicians, even in EM, do not perform this task well. (Nunn 2008, Krause 2011) Search strategies can also be biased, limiting the value of the information found. (Lau 2007)

4. The “Push” Method – Push potentially relevant information towards me in the form of pre-filtered, secondary peer-reviewed sources such as EM Abstracts, Practical Reviews in EM, Audio Digest, or EM Reports. These are sometimes useful, often tangential, and usually opinion-based analyses, and there is continued uncertainty about whether the opaque filtering process behind each product found all the key manuscripts pertinent to YOUR practice.

Obviously, nobody reads 114 manuscripts per hour. In fact, surveys suggest that the average physician (family practice, internal medicine, surgery) devotes approximately 4 hours per week to professional development reading. [Johnson 1997, Saint 2000, Schein 2000] If these busy clinicians pick up the latest issue of the leading peer-reviewed journal in their specialty each week and read it cover to cover, are they efficiently accessing the practice-changing research? In 2004, McKibbon evaluated individual articles from 170 core journals, using methodological filters from the McMaster University Health Information Research Unit to assess overall quality and risk of bias. Clinicians from the relevant specialty (e.g., an EP for emergency care research, a cardiologist for cardiovascular research) then assessed the highest-quality manuscripts for relevance to their practice, based on resource availability, disease prevalence, and what was already known on the topic.

McKibbon’s group reported some surprising results. By dividing the total number of articles in each journal by the number of “relevant articles” (using the criteria above), they computed the “Number Needed to Read” (NNR) for each journal. The only EM journal with any articles deemed worthy of assessment was the Annals of Emergency Medicine, and its NNR was 147!

In other words, an EP must read 147 Annals articles to find one that should change or verify practice for most clinicians in our specialty. By comparison, the New England Journal of Medicine’s NNR was 61 and the Journal of the American Medical Association’s was 77. Because EPs care for anybody, anywhere, anytime, a substantial proportion of the research relevant to our practice appears in non-EM journals. So, even more than in other specialties, the breadth of our reading is overwhelming. This broad knowledge base probably helps explain the proliferation of secondary peer-reviewed journals and EBM expertise within our specialty.
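The NNR is a simple ratio. The Python sketch below is a minimal illustration, not McKibbon’s actual procedure: it applies the definition above (total articles divided by relevant articles) to hypothetical counts, then converts the reported NNRs into the number of relevant articles a reader could expect per 100 articles read. The helper name and the 1,470/10 counts are invented for illustration; only the NNR values of 147, 61, and 77 come from the text.

```python
# NNR = total articles in a journal / articles judged relevant to practice.
def number_needed_to_read(total_articles: int, relevant_articles: int) -> float:
    """Number Needed to Read, as defined in the text (hypothetical helper)."""
    if relevant_articles <= 0:
        raise ValueError("NNR is undefined when no article is relevant")
    return total_articles / relevant_articles


# Hypothetical counts, chosen only to illustrate the arithmetic.
example_nnr = number_needed_to_read(1470, 10)
print(example_nnr)  # 147.0

# NNR values reported for three journals, as quoted in the article.
reported_nnr = {
    "Annals of Emergency Medicine": 147,
    "New England Journal of Medicine": 61,
    "JAMA": 77,
}

# Expected yield: relevant articles found per 100 articles read = 100 / NNR.
yield_per_100 = {journal: 100 / nnr for journal, nnr in reported_nnr.items()}
for journal, y in yield_per_100.items():
    print(f"{journal}: about {y:.2f} relevant articles per 100 read")
```

Read this way, an EP working through NEJM would find roughly 2.4 times as many practice-relevant articles per article read as one working through Annals (147 / 61 ≈ 2.4).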

One can quibble about McKibbon’s quantitative estimates of information overload ad nauseam. For example, the journals will argue that they are the foundation of the scientific process, and that the building blocks of experimentation and discovery are the preliminary reports and the hypothesis-generating, inconclusive findings of early research that is not ready for “prime time.” Or one could contend that McKibbon’s process is biased, since the Health Information Research Unit pre-filtering process is not exactly transparent or easily reproduced. However, none of these counterarguments helps the busy EP weed through the daily deluge of research to find that rare pearl of truth.

What is the solution? Secondary peer-reviewed products, including those listed above, are abundant. They can be valuable allies to busy clinicians by weeding through hundreds of journals to find, appraise, and disseminate essential nuggets of practice-changing or practice-enhancing information. In rating these products, physician consumers should assess the transparency and methodological rigor of the pre-filtering process, since these details determine which articles are selected and which are never seen. Specifically, ask:

- Who selects the articles that will be abstracted?

- What are the biases and areas of expertise of those individual(s)?

- Does the perspective of the individual(s) selecting the evidence represent your clinical priorities, hospital setting, and patient population?

- Are the methods employed to find the evidence subjective and variable or systematic and reproducible?

If your answer to any of these questions is “I don’t know,” then you cannot be confident that the filtered information you are being provided is inclusive and free of bias. Most secondary peer-review sources are not explicit about their article selection process. By not engaging end users in the selection process, these resources essentially rely on authoritarian dictate. This is problematic on several levels, including the propagation of unsubstantiated dogma through the biases of individual opinion leaders, and limited external validity for practitioners in settings dissimilar from those of the “expert.” Surprisingly, over the last 30 years, the secondary peer-review sources that do not engage their audience in the filtering process have never attempted to evaluate their methods using the same scientific principles that they espouse for the medical literature.

Sp

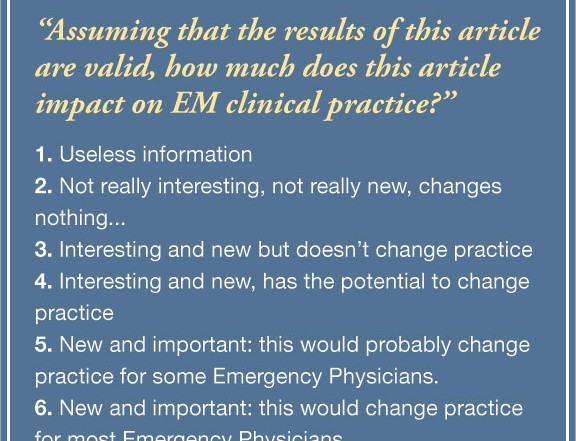

eaking of bias, I’m here to suggest that there is an exception to the rule, one which I not only recommend, but help produce. In November 2011, the BEEM group published a reliability assessment of the BEEM Rater Tool (Box). When used to rate potentially EM-relevant research manuscripts, this simple instrument was found to be highly reproducible (inter-rater reliability = 0.90-0.92) and this reliability could be attained with only 12 raters. The raters were 134 EP’s from the U.S., U.K., Canada, and Australia representing academic and community settings. Using the BEEM Rater Tool, secondary peer review sources can now begin to filter the evidence using the perspectives of the end-users confident that the raters’ assessments are reliable, if not accurate. The next step for the BEEM group will be to validate the accuracy of this instrument against an acceptable criterion standard. Stay tuned as this validation is conducted. For now, the bottom line is that the busy EP has ample access to resources to find and interpret the latest practice-enhancing research findings, but make sure that your information source is working to systematically minimize selection bias while maximizing the yield.

Christopher R. Carpenter, MD, MSc, is an assistant professor of emergency medicine and the director of evidence based medicine at Washington University in St. Louis. He is also on faculty with Best Evidence in Emergency Medicine (BEEM).
